i) Create one single master repository and configure the topology in the following way:

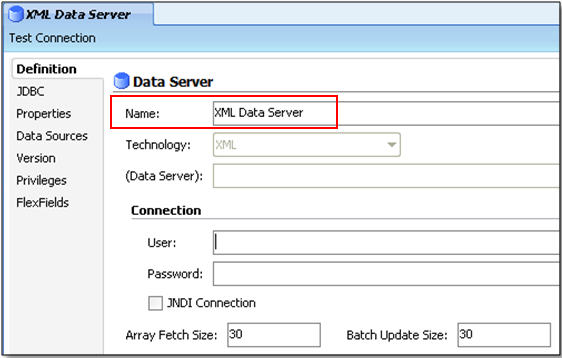

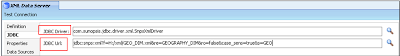

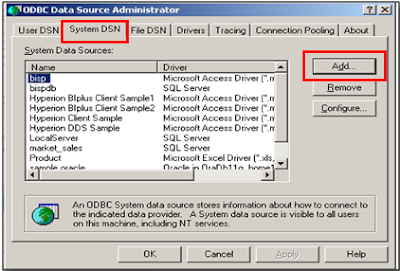

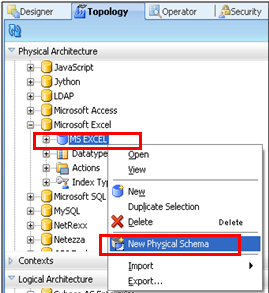

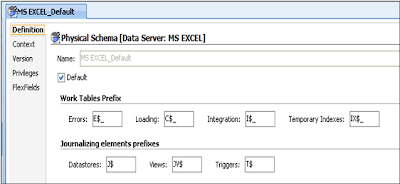

- Create the physical architecture with all your DEV/SIT/UAT/PROD servers configured. This contains your actual data servers, schemas, agents, etc.

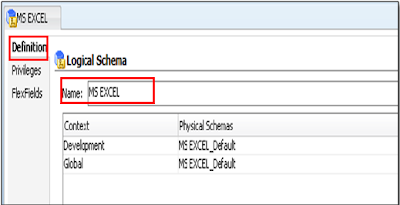

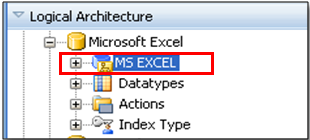

- Create the logical architecture, which contains only one set of logical schemas. Each logical schema points to a different physical schema depending on the context.

- Now create different contexts, say DEV, SIT, UAT and PROD, and point each logical schema to the appropriate physical schema in the context mapping screen.
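The context mapping described above can be pictured as a lookup keyed by context and logical schema. The following is only a minimal sketch of that idea in Python, not ODI code: the logical schema name `LS_SALES`, the physical schema names, and the function `resolve_physical_schema` are all hypothetical and chosen purely for illustration.

```python
# Hypothetical names throughout: an illustration of the idea, not an ODI API.
# One logical schema, one physical schema per context.
CONTEXT_MAPPING = {
    ("DEV", "LS_SALES"): "dev_db.SALES_DEV",
    ("SIT", "LS_SALES"): "sit_db.SALES_SIT",
    ("UAT", "LS_SALES"): "uat_db.SALES_UAT",
    ("PROD", "LS_SALES"): "prod_db.SALES",
}


def resolve_physical_schema(context: str, logical_schema: str) -> str:
    """Return the physical schema that a logical schema points to in a context."""
    try:
        return CONTEXT_MAPPING[(context, logical_schema)]
    except KeyError:
        raise LookupError(
            f"No physical schema mapped for {logical_schema!r} in context {context!r}"
        ) from None


# The same logical schema resolves differently depending on the context.
print(resolve_physical_schema("DEV", "LS_SALES"))
print(resolve_physical_schema("PROD", "LS_SALES"))
```

Because the design work refers only to the logical schema, the same object can run against any environment by switching the context.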

ii) Create a different work repository for each phase

- One schema for DEV, which is a development work repository where all the design and development happens.

- Another schema for UAT, which is an execution work repository where scenarios are pushed after being successfully tested in the DEV work repository.

- An execution work repository in your production database, which holds the final scenarios that have been tested in UAT and are ready for deployment into production.
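The layout above can be summarized as a small data structure plus a promotion order. This is a rough sketch under stated assumptions: the repository names (`WORKREP_DEV` and so on), the `WorkRepository` class, and `next_repository` are hypothetical and only model the DEV to UAT to PROD flow described in the list.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class WorkRepository:
    name: str         # hypothetical repository name
    environment: str  # DEV, UAT or PROD
    kind: str         # "development" or "execution"


# Only DEV is a development repository; UAT and PROD hold scenarios only.
REPOSITORIES = [
    WorkRepository("WORKREP_DEV", "DEV", "development"),
    WorkRepository("WORKREP_UAT", "UAT", "execution"),
    WorkRepository("WORKREP_PROD", "PROD", "execution"),
]

PROMOTION_ORDER = ["DEV", "UAT", "PROD"]


def next_repository(current_env: str) -> Optional[WorkRepository]:
    """Return the repository a tested scenario moves to next, or None after PROD."""
    position = PROMOTION_ORDER.index(current_env)
    if position + 1 == len(PROMOTION_ORDER):
        return None
    target_env = PROMOTION_ORDER[position + 1]
    return next(r for r in REPOSITORIES if r.environment == target_env)
```

For example, `next_repository("DEV")` returns the UAT execution repository, and `next_repository("PROD")` returns `None` because production is the last stage.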

To deploy, one option is to create a package that automates the workflow: scenarios are generated from the UAT/DEV boxes and deployed to the PROD execution work repository using ODI tools such as OdiImportObject, OdiGenerateAllScen, etc.

The other option is to generate the scenario in DEV, export it from the Designer screen or the command line into XML format, and then import it into a different work repository using the command line or the ODI GUI. For the command line, ODI provides import/export Java APIs, which you can find in the ODI Tool Reference Guide.
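As a rough illustration of the export/import route, the sketch below only assembles the text of two ODI tool calls. It assumes the tools `OdiExportScen` and `OdiImportScen` with the parameters `-SCEN_NAME`, `-SCEN_VERSION`, `-FILE_NAME` and `-IMPORT_MODE`; these are written from memory, so check the exact names and allowed values against the ODI Tool Reference Guide for your version. The scenario name, version and file path are hypothetical.

```python
def export_scenario_call(scen_name: str, scen_version: str, xml_file: str) -> str:
    """Build the text of an export call (tool and parameter names are assumptions)."""
    return (
        f"OdiExportScen -SCEN_NAME={scen_name} "
        f"-SCEN_VERSION={scen_version} -FILE_NAME={xml_file}"
    )


def import_scenario_call(xml_file: str, import_mode: str = "SYNONYM_INSERT_UPDATE") -> str:
    """Build the text of an import call (tool and parameter names are assumptions)."""
    return f"OdiImportScen -FILE_NAME={xml_file} -IMPORT_MODE={import_mode}"


# Hypothetical scenario and path, for illustration only.
xml_path = "/tmp/odi_export/LOAD_SALES_001.xml"
print(export_scenario_call("LOAD_SALES", "001", xml_path))  # run against DEV
print(import_scenario_call(xml_path))                       # run against UAT or PROD
```

The export call is meant to run against the DEV work repository and the import call against the target execution repository, with the XML file acting as the hand-off between the two.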
